Tech, Tech, Boom

Stories by Sari Harrar

Photos by Adam Hemingway

Around the world, researchers are exploring the resounding possibilities of machine learning and assistive technologies. At Chapman University, those explorations include a special focus on creativity and addressing unmet needs.

Rhapsody in Blue, Green, Yellow…

Concert pianist and professor Grace Fong, graduate student Rao Ali ’18 (Ph.D. ’22) and computer science professor Erik Linstead ’01 use tools of machine learning to translate classical music compositions into colorful “moving paintings.”

Project Metamorphosis taps machine learning to transform classical music into visual art.

Arpeggios spiral. Brushstrokes in blue, green, yellow and red bend, sway and swoop. High notes glimmer and deep bass tones bloom. Without a sound, the music of Mozart, Bach and Debussy unfolds as images on a screen.

It’s all part of an innovative collaboration among three members of the Chapman University community: prize-winning concert pianist Grace Fong, Associate Professor of Computer Science Erik Linstead and graduate student Rao Ali ’18 (Ph.D. ’22).

The project translates iconic piano compositions into swirling, colorful and dynamic “moving paintings.”

The cross-disciplinary Project Metamorphosis takes a deep dive into machine learning, early 20th-century music theory and an algorithm called Perlin Noise that’s used in commercial films and video games for natural-looking effects.

The result: video versions of familiar classical works.

“In the future, this system could be used to interpret music into an aesthetic experience for people with hearing impairments and hearing loss,” says Ali, a Chapman Ph.D. candidate in computational and data sciences. The translation could come through an app that renders recordings on small screens, or through displays on large video screens at live concerts, he says.

Fong is a professor and director of Piano Studies in Chapman’s College of Performing Arts, Hall-Musco Conservatory of Music. Her previous collaborations have involved dancers, painters, film directors, even Michelin-star chefs, but never computer scientists.

“This has shifted my view to understand the harmony where machines are extensions of humans through reciprocal communication,” she says. “Chapman is the type of place that provides the support and resources to make it possible for these types of projects to materialize.”

Fong, Linstead and Ali published a paper in August 2021 in the leading arts and technology journal Leonardo outlining their shared journey. “I have no background in music, though I do enjoy listening to classical pieces,” says Ali. “Working with Dr. Fong helped me get closer and closer to an aesthetic interpretation of music as visual art.”

In addition to drawing on advanced computational techniques, Ali reached back to a circa-1919 theory called Marcotones that assigns colors to musical notes, such as red for the note C and green for F sharp.

It was just the starting point. Ali worked closely with Fong and Linstead to add nuance, such as making colors darker for passages in minor keys and lighter for major keys. When Fong said she imagines arpeggios as spirals, Ali wrote code that interprets these rippling, broken chords as swirls of color. He used the Perlin Noise algorithm to produce curving, naturalistic brushstrokes, too.
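
The color rules described above can be sketched in a few lines of Python. This is an illustration only, not the project's code: the two note-to-color pairings are the ones named in the article, while the RGB values, the `shade` and `color_for` helpers and the 40 percent adjustment are assumptions chosen for the example.

```python
# Only these two pairings come from the article (red for C, green for F sharp).
# The RGB values themselves are illustrative choices.
NOTE_COLORS = {
    "C": (255, 0, 0),
    "F#": (0, 128, 0),
}


def shade(rgb, mode, amount=0.4):
    """Lighten a color for major keys and darken it for minor keys.

    `amount` is a hypothetical strength between 0 and 1, not a value
    taken from Project Metamorphosis.
    """
    if mode == "major":
        # Move each channel part of the way toward white.
        return tuple(round(c + (255 - c) * amount) for c in rgb)
    if mode == "minor":
        # Scale each channel down toward black.
        return tuple(round(c * (1 - amount)) for c in rgb)
    raise ValueError(f"unknown mode: {mode!r}")


def color_for(note, mode):
    """Look up a note's base color, then adjust it for the key's mode."""
    return shade(NOTE_COLORS[note], mode)


print(color_for("C", "major"))
print(color_for("C", "minor"))
```

A complete version would need the full note-to-color table from the original theory, which the article does not list.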

“I didn’t want jagged, straight lines,” he says.
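
Why a smooth noise function produces curving rather than jagged strokes can be shown with a small sketch. The code below is a simplified, one-dimensional gradient noise in the style of Perlin Noise, written for illustration; it is not the project's implementation, and `make_noise_1d`, `brushstroke`, the step length and the noise scale are all hypothetical.

```python
import math
import random


def make_noise_1d(seed=0, size=256):
    """Build a simplified 1D gradient-noise function (Perlin-style)."""
    rng = random.Random(seed)
    # One random slope per integer lattice point.
    gradients = [rng.uniform(-1.0, 1.0) for _ in range(size)]

    def fade(t):
        # Smoothing curve 6t^5 - 15t^4 + 10t^3, flat at t=0 and t=1.
        return t * t * t * (t * (t * 6 - 15) + 10)

    def noise(x):
        i = math.floor(x)
        t = x - i
        # Each neighboring lattice point contributes slope times distance.
        n0 = gradients[i % size] * t
        n1 = gradients[(i + 1) % size] * (t - 1)
        # Blend the two contributions with the smoothing curve.
        return n0 + fade(t) * (n1 - n0)

    return noise


def brushstroke(start, steps=50, step_len=2.0, scale=0.1, seed=0):
    """Trace a stroke whose heading drifts smoothly with the noise value."""
    noise = make_noise_1d(seed)
    x, y = start
    points = [(x, y)]
    for k in range(steps):
        # Noise lies in [-1, 1]; map it to a heading in radians.
        angle = noise(k * scale) * math.pi
        x += step_len * math.cos(angle)
        y += step_len * math.sin(angle)
        points.append((x, y))
    return points


stroke = brushstroke((0.0, 0.0))
print(len(stroke), "points")
```

Because neighboring noise values are close together, the heading changes a little at each step and the path bends gradually instead of zigzagging.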

Collaboration across disciplines helped ensure that the colors and shapes in the videos are systematic and meaningful.

Grace Fong, acclaimed concert pianist and director of Piano Studies at Chapman, worked with graduate student Rao Ali on Project Metamorphosis.

Ali is now working on a new project that uses similar techniques to translate paintings into music.

“It’s one thing to take a piano composition and just produce colors on a screen, another to make it systematic and meaningful as Rao has done,” says Linstead, associate dean at Fowler School of Engineering and principal investigator of Chapman’s Machine Learning and Affiliated Technologies (MLAT) Lab. “We wanted a mathematical, computational and musical foundation for the project so that it could be used to produce visuals of any number of musical compositions.”

Every step of the way, when the machine learning team explored something new through algorithms, they would send the work to Fong for her artistic input and advice.

“The best machine learning is done when you bring together people from different disciplines like this,” Linstead says.

Senses of Adventure

Research opens doors to high-tech, high-touch worlds.

A smartphone app features visuals that support neurodiverse learning.

Creating BendableSound, Professor Franceli Cibrian designs with a vision: “These kids deserve to have fun.”

Push it, pull it, twist it! The stretchy fabric panel set up in a school in Tijuana, Mexico, for children with severe autism seemed magical: Tap it and a glowing blue galaxy emerged. Slide your hand across it and piano music played. But “BendableSound” is more than a game. It’s a high-tech, high-touch music therapy intervention designed by Franceli Cibrian to build motor skills in neurodiverse kids.

“BendableSound and a smartphone version we designed during the pandemic support development by using several senses at the same time,” says Cibrian, an assistant professor in Chapman University’s Fowler School of Engineering. “The music, visuals and movements of the children all match up. For instance, if you push harder on the fabric, the volume of the music increases. This makes it easier for the brain to process the experience and control movements.”

Cibrian fell in love with human-computer interaction as a graduate student at the Ensenada Center for Scientific Research and Higher Education in Mexico.

“It can have huge benefits for children and teens with neurodiversity,” she says.

Today, her research at Chapman focuses on the design, development and evaluation of ubiquitous interactive technology to support child development. Her projects, often in collaboration with experts from other institutions, get results.

Professor Franceli Cibrian

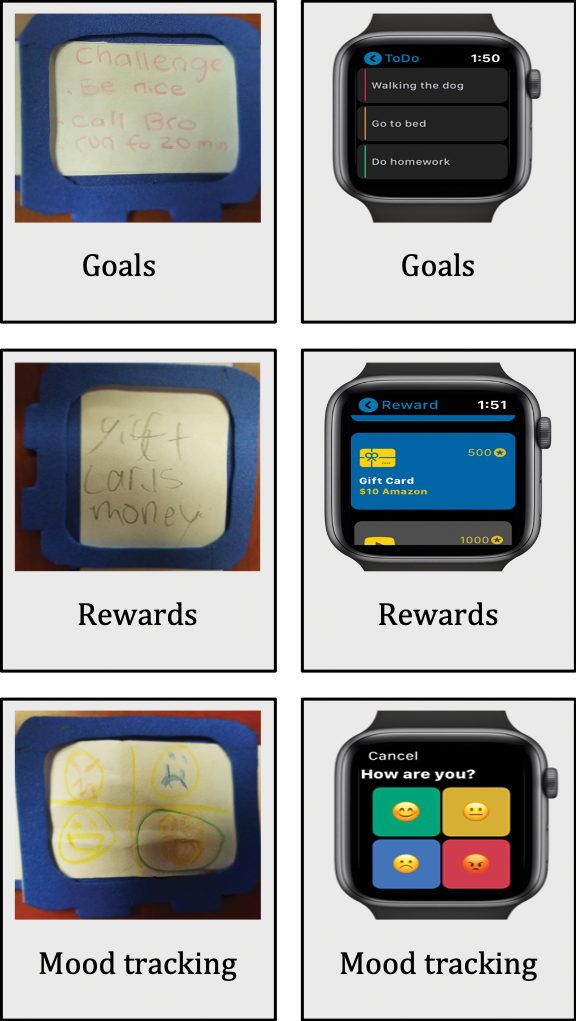

A smartwatch app called CoolCraig helps youngsters with attention deficit hyperactivity disorder and their parents establish goals and rewards.

BendableSound worked as well as or better than traditional music therapy for improving strength, coordination and reaction times in a 2020 study of 22 children, ages 4 to 8, with autism. An exercise game called Circus in Motion that adjusts to a child’s abilities increased the amount of activity kids got compared with traditional vestibular system exercises for balance and coordination, according to a 2021 study.

In a preliminary 2020 report, a smartwatch app called CoolCraig, developed and tested with Cibrian’s colleagues at UC Riverside and UC Irvine, was well received by pre-teens and teens with attention deficit hyperactivity disorder and their parents. More research is planned. Parents and kids use CoolCraig together, setting goals for activities and behaviors and deciding on rewards. The smartwatch app provides reminder notifications to kids, while a companion smartphone app lets parents monitor and support them.

The goals are serious. The big bonus? This assistive tech is really fun.

“These kids deserve to have fun,” Cibrian says. “One of my favorite examples is little kids using their whole bodies – their heads, their backs – as they explore the elastic fabric of BendableSound. They’re learning to control their bodies and having a good time as they do it.”

The Science of Touch

Sensory tools like a haptic sleeve provide new avenues to teaching and experience.

Professor Dhanya Nair’s HEART Lab uses haptics to make refreshable Braille displays more accessible.

What if a tiny, low-cost Braille display could fit on a smartphone? Inside the HEART Lab at Chapman University’s Fowler School of Engineering, Dhanya Nair is harnessing the science of touch to make such assistive technologies widely accessible and easily portable.

With a grant from the National Science Foundation, Nair will soon test a refreshable Braille display that uses an array of rounded pins instead of dots on a page to create an ever-changing stream of information, two to three Braille characters at a time. She envisions the device fitting on the case of a smartphone or computer tablet for instant translation of messages, search results and even touchable versions of graphics, emoticons and map directions for the sight impaired.
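
The idea of a pin array showing a few characters at a time can be sketched in Python. This is an illustration under stated assumptions, not Nair's design: it handles only uncontracted letters a to z and spaces, uses the standard six-dot cell, and the helper names `cell`, `to_unicode` and `frames` and the three-cell window are hypothetical.

```python
# Standard six-dot Braille patterns for the letters a-z (dots numbered 1-6).
DOTS = {
    "a": "1", "b": "12", "c": "14", "d": "145", "e": "15",
    "f": "124", "g": "1245", "h": "125", "i": "24", "j": "245",
    "k": "13", "l": "123", "m": "134", "n": "1345", "o": "135",
    "p": "1234", "q": "12345", "r": "1235", "s": "234", "t": "2345",
    "u": "136", "v": "1236", "w": "2456", "x": "1346", "y": "13456",
    "z": "1356",
}


def cell(ch):
    """Return the set of raised pins for one character.

    A space raises no pins; anything outside a-z raises KeyError,
    since this sketch covers letters only.
    """
    if ch == " ":
        return frozenset()
    return frozenset(int(d) for d in DOTS[ch.lower()])


def to_unicode(pins):
    """Render a pin set as a Unicode Braille character for on-screen preview."""
    return chr(0x2800 + sum(1 << (p - 1) for p in pins))


def frames(text, width=3):
    """Yield successive groups of up to `width` cells.

    The default of three mirrors the 'two to three characters at a time'
    described in the article; the real device may page differently.
    """
    for start in range(0, len(text), width):
        yield [cell(ch) for ch in text[start:start + width]]


for frame in frames("hello"):
    print("".join(to_unicode(pins) for pins in frame))
```

Each frame is what the pins would hold at one moment; refreshing the display means lowering the old pins and raising the next frame's set.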

“Refreshable Braille displays on the market now are larger and can cost thousands of dollars,” says Nair, an assistant professor of engineering at Chapman.

Professor Dhanya Nair

Another of her ongoing research projects is the “haptic sleeve,” a fabric sleeve with four motors that deliver vibrations to the forearm. “We are testing whether the vibrations help train hand movements,” Nair says. “Right now we’re studying it with handwriting in healthy people. The hope is to use it in the future for rehabilitation for motor disabilities such as after a stroke, when some people lose the ability to control their hands and have to re-learn how to write.”

A haptic sleeve study will launch this summer, looking at the best way to use vibrations to direct movements for making cursive letters.

Ever curious about the science of touch, Nair has added a twist called Tactile Music. “I want to know whether you can re-create the experience of listening to music as vibrations, such as for the hearing-impaired,” she says. “We’ll translate instrumental music that’s classified as happy or sad or calm into vibrations, play them using the sleeve and ask study participants which emotions they relate to it.”

This summer’s study of the refreshable Braille display, in potential community partnership with the Braille Institute Anaheim Center and Beyond Blindness of Santa Ana, will fine-tune the system by testing whether subtle vibrations or a sideways motion of the pins makes the characters more legible.

“Braille is read by moving your fingers across it, not by pressing down on one character,” she says. “We are adding movement to simulate that sensation.”

The name of Nair’s lab – an acronym that stands for Haptic Educational Assistive & Rehabilitation Technology – refers not just to Nair’s focus on haptics (technology that produces the experience of touch) but also to her passion for using it to fill unmet human needs.